Validation, implementation, and maintenance are major components of the research and development that goes into creating useful tests. Advances in psychometrics, such as Item Response Theory (IRT), allow test takers to be assessed more accurately.

What is IRT?

IRT represents a general framework for understanding the relationship between an individual’s standing on an underlying factor (e.g., a particular skill, ability, or trait) and their responses to assessment items designed to measure that underlying factor.

Given a set of items with known characteristics and a set of responses to those items, IRT permits the calculation of a score that estimates the individual’s true standing on the underlying factor of interest.

IRT vs. CTT Scoring

All else being equal, assessments scored using IRT have several advantages over tests scored with more traditional approaches, such as those rooted in Classical Test Theory (CTT).

Under CTT, an individual’s score is typically just the number of items answered correctly: one compares the individual’s responses to a scoring key and counts the matches. IRT, on the other hand, uses the item characteristics and the individual’s pattern of responses to provide a more accurate estimate of the underlying factor.
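To make the CTT side concrete, here is a minimal sketch of number-correct scoring. The function name, the answer key, and the responses are hypothetical and chosen only for illustration; every item counts the same, regardless of how hard it is.

```python
def number_correct(responses: list[str], key: list[str]) -> int:
    """CTT-style raw score: count the responses that match the scoring key."""
    if len(responses) != len(key):
        raise ValueError("responses and key must have the same length")
    # Each match contributes exactly 1 point, so all items are weighted equally.
    return sum(r == k for r, k in zip(responses, key))

# Hypothetical five-item answer key and one test taker's responses.
key = ["B", "D", "A", "C", "A"]
responses = ["B", "D", "A", "C", "B"]
print(number_correct(responses, key))  # 4
```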

IRT gains these benefits over CTT by using scoring models that can factor in the difficulty of each item, how well the item distinguishes between individuals with low and high amounts of the underlying factor (its discrimination), and the likelihood of answering the item correctly by guessing.
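One common way to combine these three item characteristics is the three-parameter logistic (3PL) model, sketched below. The article does not say which IRT model is used for its assessments, so treat this as an illustrative assumption; the optional 1.7 scaling constant some texts include is omitted, and the item parameters in the loop are made up.

```python
import math

def p_correct_3pl(theta: float, a: float, b: float, c: float) -> float:
    """Three-parameter logistic (3PL) probability of a correct response.

    theta: the test taker's standing on the underlying factor
    a: discrimination (how sharply the item separates low from high theta)
    b: difficulty (the theta where the curve is halfway between c and 1)
    c: pseudo-guessing (lower asymptote: the chance of a correct guess)
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))

# Hypothetical item: moderately discriminating, average difficulty, and a
# guessing floor of 0.25 (as might suit a four-option multiple-choice item).
for theta in (-2.0, 0.0, 2.0):
    print(theta, round(p_correct_3pl(theta, a=1.5, b=0.0, c=0.25), 3))
```

Raising `b` shifts the curve to the right (a harder item), raising `a` makes it steeper, and `c` keeps the probability from falling below the guessing rate even for very low `theta`.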

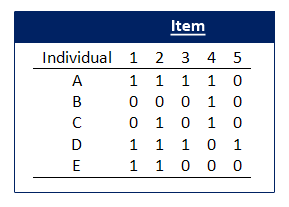

Here’s an example. The table below presents item scores (0 = incorrect, 1 = correct) on a five-item test given to five people (A, B, C, D, and E). Person A, for example, answered items 1, 2, 3, and 4 correctly and answered item 5 incorrectly.

Based on the information provided in the table, who has the greatest apparent ability on this five-item test?

Most people would say that individuals A and D have the greatest apparent ability.

In terms of CTT, that would be the correct answer: Individuals A and D both answered 4 of the 5 items correctly. However, as I mentioned above, IRT does not necessarily treat all items equally. For example, what if item 5 were substantially more difficult than item 4? If so, we might estimate Individual D’s ability to be greater than Individual A’s.
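The sketch below shows how such a comparison could play out numerically, using maximum-likelihood estimation under a two-parameter logistic (2PL) model and a simple grid search. Everything here is an assumption for illustration: the item parameters are invented, and the response patterns assume D missed item 4 and answered item 5 correctly, which is the comparison the example implies.

```python
import math

# Hypothetical 2PL item parameters (not from the article): (a, b) pairs,
# where a = discrimination and b = difficulty. Item 5 is harder than
# item 4 and, in this sketch, also more discriminating.
ITEMS = [(1.0, -1.5), (1.0, -1.0), (1.0, -0.5), (1.0, 0.0), (2.0, 1.5)]

def p_correct_2pl(theta, a, b):
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def log_likelihood(theta, pattern, items=ITEMS):
    """Log-probability of a 0/1 response pattern at a given theta."""
    total = 0.0
    for x, (a, b) in zip(pattern, items):
        p = p_correct_2pl(theta, a, b)
        total += math.log(p) if x == 1 else math.log(1.0 - p)
    return total

def estimate_theta(pattern, items=ITEMS, lo=-4.0, hi=4.0, step=0.001):
    """Maximum-likelihood theta found by a simple grid search over [lo, hi]."""
    n = int(round((hi - lo) / step))
    grid = (lo + i * step for i in range(n + 1))
    return max(grid, key=lambda t: log_likelihood(t, pattern, items))

# Assumed patterns: A missed item 5; D missed item 4 but answered item 5.
person_a = [1, 1, 1, 1, 0]
person_d = [1, 1, 1, 0, 1]
theta_a = estimate_theta(person_a)
theta_d = estimate_theta(person_d)
print(f"A: {theta_a:.2f}  D: {theta_d:.2f}")  # D's estimate is higher
```

In this 2PL sketch the separation is driven by item 5's larger assumed discrimination. If all five items shared the same discrimination (a Rasch-type model), the number correct would be a sufficient statistic for theta, and A and D would receive identical estimates.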

IRT is just one of the many tools that our R&D team employs to create our cutting-edge and proven assessments.